Quantum computers promise speeds and efficiencies that even today’s best supercomputers cannot match. However, because they cannot yet reliably correct their own errors, the technology has not been scaled up or commercialized to any significant degree. Conventional computers can protect data by storing redundant copies, but quantum information cannot be copied (a consequence of the no-cloning theorem), so scientists have had to devise a different approach.

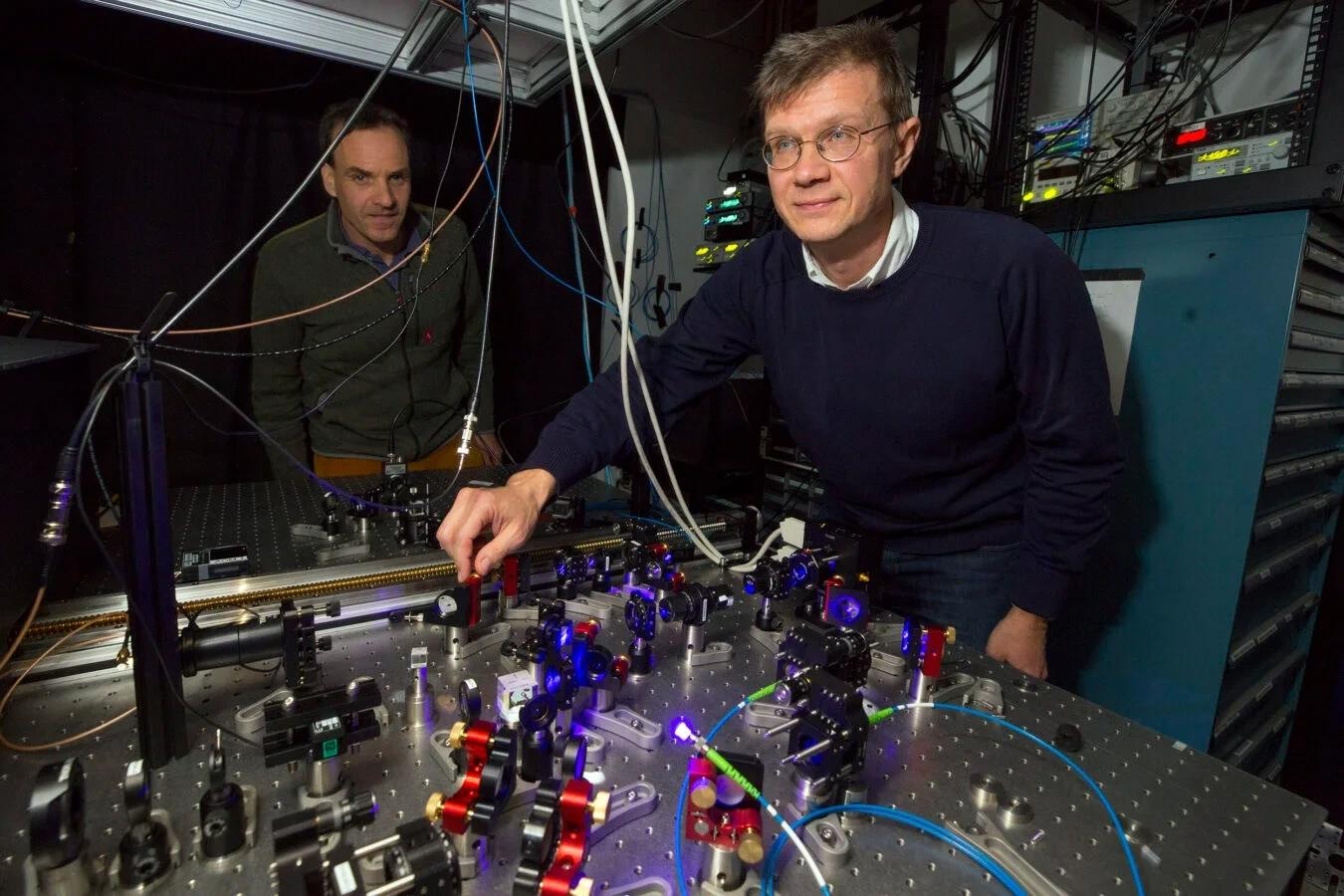

Harvard physicists Mikhail Lukin (foreground) and Markus Greiner work with a quantum simulator. Image Credit: Jon Chase/Harvard Staff Photographer

A new study published in Nature demonstrates the potential of a Harvard quantum computing platform to address the long-standing challenge of quantum error correction.

Mikhail Lukin, the Joshua and Beth Friedman University Professor of Physics and co-director of the Harvard Quantum Initiative, led the Harvard team. The Nature study was the result of a collaboration among Harvard, MIT, and Boston-based QuEra Computing. The group of Markus Greiner, the George Vasmer Leverett Professor of Physics, also participated.

The Harvard platform, which has been under development for several years, is based on an array of ultracold rubidium atoms held in place by lasers. Each atom serves as a quantum bit, or “qubit,” the basic unit of information in a quantum computer.

The team’s main breakthrough is that its “neutral atom array” can dynamically reconfigure itself mid-computation, moving atoms around and linking them through a process physicists call “entangling.” Two-qubit logic gates, which entangle pairs of atoms, are the basic building blocks of quantum computation.

Running a complex computation on a quantum computer requires many such gates. These gate operations, however, are notoriously error-prone, and as errors accumulate they quickly render the result of the algorithm unreliable.

In the new paper, the team reports two-qubit entangling gates with exceptionally low error rates. They showed, for the first time, that they could entangle atoms with error rates below 0.5%, that is, with a fidelity above 99.5%.
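To see why an error rate below 0.5% matters, consider a deliberately simplified model (an assumption for illustration, not the team’s analysis) in which every two-qubit gate fails independently with the same probability p. A circuit of N gates then runs without a single error with probability (1 − p)^N, which shrinks rapidly as N grows. The function name and the 2% comparison value below are hypothetical choices made only for this sketch.

```python
# Toy model (assumption): each two-qubit gate fails independently with
# probability p, so a circuit succeeds only if every one of its gates does.
def circuit_success_probability(p: float, n_gates: int) -> float:
    """Probability that n_gates independent gates all run without error."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n_gates < 0:
        raise ValueError("n_gates must be non-negative")
    return (1.0 - p) ** n_gates


if __name__ == "__main__":
    # Compare a hypothetical 2% gate error with the 0.5% level in the article.
    for p in (0.02, 0.005):
        for n in (10, 100, 1000):
            prob = circuit_success_probability(p, n)
            print(f"p={p:.3f}, gates={n:5d}: success ~ {prob:.3g}")
```

In this toy model, a 100-gate circuit succeeds roughly 61% of the time at p = 0.005 but only about 13% of the time at p = 0.02. Real devices have correlated and gate-dependent errors, so these numbers only show the trend, not actual hardware performance.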

This puts the platform on par with other leading quantum computing approaches, such as superconducting qubits and trapped-ion qubits, in terms of operation quality.

At the same time, Harvard’s approach offers significant advantages over its competitors: large system sizes, efficient qubit control, and the ability to dynamically rearrange the layout of its atoms.

We have established that this platform has low enough physical errors that you can actually envision large-scale, error-corrected devices based on neutral atoms. Our error rates are low enough now that if we were to group atoms together into logical qubits—where information is stored non-locally among the constituent atoms—these quantum error-corrected logical qubits could have even lower errors than the individual atoms.

Simon Evered, Study First Author, Griffin Graduate School of Arts and Sciences, Harvard University
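Evered’s point that a logical qubit can have a lower error rate than the atoms it is built from can be illustrated with the simplest textbook example, the three-qubit bit-flip repetition code. This is not the scheme the team describes. The code encodes a|0⟩ + b|1⟩ as a|000⟩ + b|111⟩, spreading the information across three qubits without copying the state. The sketch below is a toy model that assumes each physical qubit suffers an independent bit flip with probability p, ignores phase errors and errors in the correction step itself, and decodes by majority vote. Under those assumptions, the logical qubit fails only if two or more of the three qubits flip, giving a logical error rate of 3p^2(1 − p) + p^3 = 3p^2 − 2p^3. The function name is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, n: int = 3) -> float:
    """Failure probability of majority-vote decoding for an n-qubit repetition code.

    Toy assumption: each of the n physical qubits flips independently with
    probability p, and decoding fails when more than half of them flip.
    """
    if n % 2 == 0:
        raise ValueError("use an odd code length so the majority vote is defined")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    min_flips = n // 2 + 1  # smallest number of flips that fools the vote
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(min_flips, n + 1)
    )


if __name__ == "__main__":
    p = 0.005  # physical error rate matching the article's 0.5% figure
    for n in (1, 3, 5):
        print(f"n={n}: logical error ~ {logical_error_rate(p, n):.2e}")
```

At p = 0.005 the three-qubit code gives a logical error rate of about 7.5 × 10^-5, well below the physical 0.5%. In this toy model encoding helps whenever p is below 1/2; realistic codes that also handle phase errors and faulty measurements have much lower thresholds (on the order of 1% for the surface code under commonly studied noise models), which is why sub-percent physical error rates are significant.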

The Harvard team’s findings were published in the same issue of Nature as related advances led by former Harvard graduate student Jeff Thompson, now at Princeton University, and former Harvard postdoctoral researcher Manuel Endres, now at the California Institute of Technology.

These breakthroughs pave the way for quantum error-corrected algorithms and large-scale quantum computing. Together, they suggest that quantum computing on neutral-atom arrays is living up to its potential.

These contributions open the door for very special opportunities in scalable quantum computing and a truly exciting time for this entire field ahead.

Mikhail Lukin, Joshua and Beth Friedman University Professor, Physics, Harvard University

The study was supported by the US Department of Energy’s Quantum Systems Accelerator Center, the Center for Ultracold Atoms, the National Science Foundation, the Army Research Office Multidisciplinary University Research Initiative, and the DARPA Optimization with Noisy Intermediate-Scale Quantum Devices program.

Journal Reference

Evered, S. J., et al. (2023) High-fidelity parallel entangling gates on a neutral-atom quantum computer. Nature. doi:10.1038/s41586-023-06481-y