Apr 24 2013

Quantum physics promises faster computation, but today's most advanced quantum computers operate with fewer than 20 quantum bits (qubits). Realising the technology's full potential will require much larger machines, and researchers are busy planning how to build them. A new protocol designed by researchers in Singapore and the UK lends support to a 'noisy network' approach. The work is published in Nature Communications.

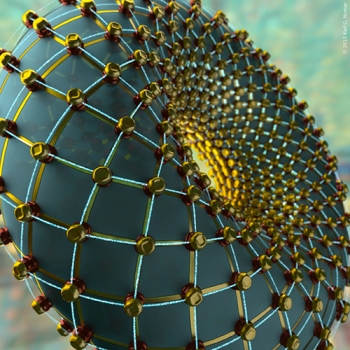

Data arranged over the surface of a doughnut shape (a torus) can be protected by an error-correcting topological code. The new work presents an effective protocol for a toric code's stabilising steps. Image © Karl G. Nyman

You can take two routes to scaling up a quantum computing architecture: build it bigger or hook up many small units into a network. The approaches are analogous to what's been done in ordinary computing: build a supercomputer like the famous chess-playing Deep Blue or connect standard desktop computers into a more powerful network.

Which to choose? "The conventional vision would be a single large array of thousands or millions of components. That kind of thing works fine for conventional computer chips but it's proved very hard when the components are the fragile and difficult-to-control 'qubits' for quantum processing," says Simon Benjamin, a CQT Visiting Research Associate Professor from the University of Oxford and one of the paper's authors.

But while a large monolithic array may be challenging to build, networks face their own problem: noise. Measurements and environmental influences easily introduce errors into the qubits. In a network, errors arise not only within the cells but also in the connections between them. Accepting that network connections might have error rates of 10% or more seemed to imply that the individual cells would need error rates too low to reach with current technology.
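
Why noisy links looked so daunting can be seen with a deliberately crude toy model. This sketch is an assumption for illustration, not the noise model used in the paper: it supposes that one stabilising step across four cells naively uses a hypothetical 3 network links and 8 local operations, that every error is independent, and that any single error spoils the step. The function name `step_failure_probability` and the operation counts are hypothetical.

```python
# Toy model (an assumption, not the paper's noise model): a stabilising step
# is "spoiled" if any one of its independent operations goes wrong.


def step_failure_probability(p_link: float, n_links: int,
                             p_cell: float, n_cell_ops: int) -> float:
    """Probability that at least one link or local cell operation fails."""
    p_all_ok = (1.0 - p_link) ** n_links * (1.0 - p_cell) ** n_cell_ops
    return 1.0 - p_all_ok


if __name__ == "__main__":
    # Hypothetical counts: 3 links to join four cells, 8 local operations.
    for p_cell in (0.001, 0.00825):
        for p_link in (0.0, 0.10):
            p_fail = step_failure_probability(p_link, 3, p_cell, 8)
            print(f"p_link={p_link:.2f}  p_cell={p_cell:.5f}  ->  {p_fail:.3f}")
```

In this naive picture a 10% link error spoils the step more than a quarter of the time however good the cells are, which is why using noisy links directly looked hopeless. The toy model does not reproduce the authors' protocol; it only shows the problem that protocol has to overcome.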

In their paper, Simon and co-authors Ying Li from CQT and Naomi Nickerson from Imperial College London, both PhD students, present a network protocol that raises the permissible intra-cell error rate in such a noisy network from around 0.1% to 0.825%. This approximately matches the error rate that can be tolerated in a monolithic architecture, a level that should be achievable experimentally. "Surprisingly the whole thing will work just as well as that single giant pristine array would," says Simon.

The protocol is implemented in a network consisting of cells of four or five quantum bits. One bit stores the data and the other 'ancilla' bits help to protect it. The overall structure of the network is a torus (see illustration), with cells connected as if they were arranged on the surface of a doughnut. This allows the use of the well-established 'toric topological code', which spreads information over the network to make it resistant to errors. The toric code is stabilised by protocols performed on sets of four neighbouring cells.
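
To make "sets of four neighbouring cells" concrete, here is a minimal sketch of one common toric-code layout, assumed for illustration and not necessarily the exact layout used in the paper. Each cell holds one data qubit on an L x L grid with periodic (torus) boundaries. Every 2 x 2 block of neighbouring cells is a stabiliser, checking either bit flips (Z-type) or phase flips (X-type) in a checkerboard pattern. The function names `plaquettes`, `all_commute` and `syndrome` are hypothetical.

```python
from itertools import product


def plaquettes(L: int):
    """Return (kind, cells) for every 2x2 block of neighbouring cells on an
    L x L torus; kinds alternate X/Z in a checkerboard (assumed layout)."""
    if L < 4 or L % 2:
        # Odd L breaks the checkerboard where the torus wraps around.
        raise ValueError("use an even L >= 4")
    stabs = []
    for i, j in product(range(L), repeat=2):
        cells = frozenset({(i, j), ((i + 1) % L, j),
                           (i, (j + 1) % L), ((i + 1) % L, (j + 1) % L)})
        kind = "X" if (i + j) % 2 == 0 else "Z"
        stabs.append((kind, cells))
    return stabs


def all_commute(stabs) -> bool:
    """An X-type and a Z-type check commute iff they share an even number
    of cells; a valid code needs every such pair to commute."""
    xs = [cells for kind, cells in stabs if kind == "X"]
    zs = [cells for kind, cells in stabs if kind == "Z"]
    return all(len(x & z) % 2 == 0 for x in xs for z in zs)


def syndrome(stabs, x_errors):
    """Indices of Z-type checks flipped by bit-flip errors on the given cells."""
    errs = set(x_errors)
    return [n for n, (kind, cells) in enumerate(stabs)
            if kind == "Z" and len(cells & errs) % 2 == 1]


if __name__ == "__main__":
    stabs = plaquettes(4)
    print(len(stabs), "four-cell checks; all commute:", all_commute(stabs))
    print("checks flagged by one error at (0, 0):", syndrome(stabs, [(0, 0)]))
```

A single bit-flip error lights up exactly two neighbouring Z-type checks. A decoder works backwards from such patterns to infer where errors occurred, and measuring those four-cell checks reliably across noisy links is the step the new protocol addresses.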

The contribution by Simon, Ying and Naomi is to design a new protocol for the toric code's stabilising steps. They come up with three variants that offer increasing error tolerance in exchange for taking longer or requiring an extra bit.

Ying, who submitted his thesis just weeks before this paper's publication, says "I spent my whole PhD trying to work out such a protocol." His thesis lays out the groundwork but doesn't include the new result. "By the time this idea came out my thesis was nearly complete!" says Ying, who will be applying for postdoctoral positions.

Showing that the intra-cell error rate can go above 0.8% is a promising step, but the team point out that they have only considered error rates in data storage, not in computation. It's generally accepted that computation should similarly benefit, but this needs to be checked.

Ultimately the decision about whether to build bigger or to network will also depend on what type of quantum bit the computer uses. Some systems, such as solid-state quantum bits built from superconducting circuits, may lend themselves more naturally to large-scale arrays. Other systems, such as trapped ions or nitrogen-vacancy centres in diamond, may be more amenable to networking. It's good to have options.