Mar 16 2013

To make the most precise measurement yet of the Cosmic Microwave Background (CMB) – the remnant radiation from the big bang – the European Space Agency's (ESA's) Planck satellite mission has been collecting trillions of observations of the sky since the summer of 2009.

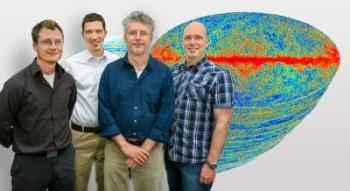

This image shows, from left: Reijo Keskitalo, Aaron Collier, Julian Borrill, and Ted Kisner of the Computational Cosmology Center with a few of the many thousands of simulations they created for Planck Full Focal Plane 6.


On March 21, 2013, ESA and NASA, a major partner in Planck, will release preliminary cosmology results based on Planck's first 15 months of data. The results have required the intense creative efforts of a large international collaboration, with significant participation by the U.S. Planck Team based at NASA's Jet Propulsion Laboratory (JPL).

Strength in data analysis is a major U.S. contribution, including the resources of the U.S. Department of Energy's National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory and the expertise of scientists in Berkeley Lab's Computational Cosmology Center (C3).

"NERSC supports the entire international Planck effort," says Julian Borrill of the Computational Research Division (CRD), who cofounded C3 in 2007 to bring together scientists from CRD and the Lab's Physics Division. "Planck was given an unprecedented multi-year allocation of computational resources in a 2007 agreement between DOE and NASA, which has so far amounted to tens of millions of hours of massively parallel processing, plus the necessary data-storage and data-transfer resources."

JPL's Charles Lawrence, Planck Project Scientist and leader of the U.S. team, says that "without the exemplary interagency cooperation between NASA and DOE, Planck would not be doing the science it's doing today."

Glen Crawford, Division Director for High Energy Physics Research and Technology within DOE's Office of Science, says, "The computational expertise and resources applied to Planck are an essential contribution to the most detailed look yet at the cosmic microwave background radiation, a foundation stone of modern cosmology."

Massive simulations crucial to understanding real data

The cosmological signal in the CMB data set is tiny, and separating it from the overwhelming instrument noise and astrophysical foregrounds requires enormous data sets – Planck's 72 detectors gather 10,000 samples per second as they sweep over the sky – and exquisitely precise analyses.
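A rough consistency check of these figures can be sketched in Python. It assumes, because the text does not say, that 10,000 samples per second is the combined rate of all 72 detectors rather than a per-detector rate; the average month length and the function name are illustrative choices, not part of any Planck software.

```python
# Back-of-envelope sketch; assumes 10,000 samples/s is the combined
# rate of all 72 detectors, not a per-detector rate.
SAMPLES_PER_SECOND = 10_000
SECONDS_PER_DAY = 86_400
DAYS_PER_MONTH = 365.25 / 12  # average calendar month


def total_samples(months: float, rate: float = SAMPLES_PER_SECOND) -> float:
    """Time-ordered samples collected over `months` at `rate` samples per second."""
    seconds = months * DAYS_PER_MONTH * SECONDS_PER_DAY
    return rate * seconds


if __name__ == "__main__":
    # First 15 months of observations, as used for the 2013 results.
    print(f"{total_samples(15):.2e} samples")  # prints "3.94e+11 samples"
```

On this assumption the first 15 months alone yield roughly 4 × 10^11 samples, the same order of magnitude as the "about a trillion observations" Borrill cites. Reading the rate as per detector would instead give tens of trillions.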

"The sheer volume of the Planck data, with about a trillion observations of a billion points on the sky, means that the techniques of exact analysis we used in the past for the data from balloon flights are no longer tractable," says Borrill. "Instead we have to use approximate methods, and because they're approximate, we have to worry about their possible uncertainties and biases."
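Why exact methods stop being tractable can be illustrated with a scaling estimate. The sketch assumes the usual cost model for an exact, dense pixel-domain likelihood analysis: compute time grows as the cube of the number of map pixels and memory as its square. The balloon-era map size of 10^5 pixels is a hypothetical round number chosen only for illustration.

```python
# Sketch of how a dense, exact pixel-domain analysis scales.
# Assumption: time ~ n_pix**3 and memory ~ n_pix**2 (dense covariance matrix).
BYTES_PER_ENTRY = 8  # one double-precision matrix element


def time_ratio(n_pix_new: float, n_pix_old: float) -> float:
    """Factor by which compute time grows when the map grows from n_pix_old to n_pix_new."""
    return (n_pix_new / n_pix_old) ** 3


def matrix_bytes(n_pix: float) -> float:
    """Memory needed for one dense n_pix x n_pix covariance matrix."""
    return BYTES_PER_ENTRY * n_pix**2


if __name__ == "__main__":
    balloon_pixels = 1e5  # hypothetical balloon-era map size
    planck_pixels = 1e9   # "a billion points on the sky"
    print(f"time grows by {time_ratio(planck_pixels, balloon_pixels):.0e}")  # prints "time grows by 1e+12"
    print(f"one matrix needs {matrix_bytes(planck_pixels):.0e} bytes")      # prints "one matrix needs 8e+18 bytes"
```

Under these assumptions, going from 10^5 to 10^9 pixels multiplies the run time by about 10^12, and a single dense covariance matrix for a billion-pixel map would occupy roughly 8 exabytes, which is why approximate methods become unavoidable.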

The only way to be sure of the Planck analysis is to compare it with a huge suite of Monte Carlo simulations, long known to be the most challenging part of the computation. In preparation, Borrill's group at C3 has spent more than a decade building up its simulation capability, tuning it to each new generation of NERSC supercomputers. The result is a suite of massively parallel codes running on Hopper, NERSC's 150,000-core Cray XE6.

Planck's first cosmology results are supported by the sixth round of simulations of Planck's full focal plane (FFP6), which includes data from all the detectors of both the high- and low-frequency instruments. Previous simulations were mainly intended to validate and verify Planck's analysis pipelines, but FFP6 simulations are aimed at quantifying uncertainties and correcting biases in the analysis of the real data.
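The idea of using simulations to quantify uncertainties and correct biases can be shown with a toy Monte Carlo in Python. This is a generic sketch, not the FFP6 pipeline: the "true" signal, the deliberately biased `approximate_estimator`, the noise level, and all other names are hypothetical. Because the input signal of every simulation is known, the mean offset of the estimates measures the method's bias and their spread measures its statistical uncertainty; both can then be applied to the estimate from the real data.

```python
import random
import statistics

# Toy Monte Carlo calibration; every name and number here is hypothetical,
# not part of the Planck pipeline.
TRUE_SIGNAL = 1.0  # value injected into every synthetic realization


def approximate_estimator(data: list[float]) -> float:
    """Stand-in for an approximate analysis with a hidden 2% bias."""
    return 0.98 * statistics.fmean(data)


def simulate(rng: random.Random, n_samples: int = 400, noise: float = 5.0) -> list[float]:
    """One synthetic realization: known signal plus Gaussian noise."""
    return [TRUE_SIGNAL + rng.gauss(0.0, noise) for _ in range(n_samples)]


def calibrate(n_realizations: int, seed: int = 0) -> tuple[float, float]:
    """Run the estimator on many simulations; return (bias, scatter)."""
    rng = random.Random(seed)
    estimates = [approximate_estimator(simulate(rng)) for _ in range(n_realizations)]
    bias = statistics.fmean(estimates) - TRUE_SIGNAL  # systematic offset of the method
    scatter = statistics.stdev(estimates)             # spread = statistical uncertainty
    return bias, scatter


if __name__ == "__main__":
    bias, scatter = calibrate(1_000)
    rng = random.Random(42)
    raw = approximate_estimator(simulate(rng))  # plays the role of the "real" data
    print(f"raw={raw:.3f} corrected={raw - bias:.3f} +/- {scatter:.3f}")
```

With 1,000 realizations the toy recovers the injected 2 percent bias to within roughly a percentage point, and the correction is simply subtracted from the raw estimate; more realizations would pin down both the bias and the scatter more tightly.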

To minimize statistical uncertainties, Planck needs the largest possible suite of simulations. FFP6 consists of 1,000 synthetic realizations of the first 15 months of Planck observations, each of which is then analyzed just like the real data. The result – 250,000 maps of the Planck sky – constitutes the largest CMB Monte Carlo set ever fielded, but is only 10 percent of Planck's ultimate goal.

"The more realizations we can produce, the smaller the uncertainty," Borrill says. FFP6's 1,000 realizations allow Planck to reduce the statistical uncertainty to the three-percent level; in 2014 and 2015, Planck's full-mission data releases will aim for one-percent statistical uncertainties, requiring 10,000 simulations.
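The step from 1,000 to 10,000 simulations is consistent with the familiar 1/√N behavior of Monte Carlo errors. The sketch assumes that scaling (the article does not state it explicitly) and uses the quoted 3 percent at 1,000 realizations as its reference point; the function names are illustrative.

```python
import math

# Assumption: the Monte Carlo uncertainty shrinks as 1/sqrt(N); the 3% at
# 1,000 simulations is the reference point quoted in the text.
REF_SIMS = 1_000
REF_UNCERTAINTY = 0.03


def uncertainty(n_sims: int) -> float:
    """Fractional uncertainty expected from n_sims realizations."""
    return REF_UNCERTAINTY * math.sqrt(REF_SIMS / n_sims)


def sims_needed(target: float) -> float:
    """Realizations needed to reach a target fractional uncertainty."""
    return REF_SIMS * (REF_UNCERTAINTY / target) ** 2


if __name__ == "__main__":
    print(f"{uncertainty(10_000):.2%}")  # prints "0.95%"
    print(f"{sims_needed(0.01):.0f}")    # prints "9000"
```

Ten times more realizations shrink the uncertainty by a factor of √10 ≈ 3.2, to about 0.95 percent; reaching exactly 1 percent would take about 9,000, which the text rounds to 10,000.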

Interagency cooperation key to success

For over a decade, some 100 Planck data analysts from a dozen countries on three continents have converged at NERSC, whose world-leading high-performance computing capability, open-access policies, and history of support for CMB analysis made it a clear choice for Planck.

So crucial is NERSC to Planck that in 2007 NASA and DOE negotiated a formal interagency agreement that guaranteed Planck access to NERSC for the duration of its mission. With this security, the U.S. Planck Team was able to base its dedicated computational resources at NERSC and take advantage of NERSC's global file-system capability and systems administration expertise. The longstanding relationship between Planck and NERSC has proved mutually beneficial.

"Planck has been an enthusiastic and demanding tester of new NERSC services, procedures, and computing systems before their general release, helping to find and fix problems early," says JPL's Lawrence. "At the same time, the NERSC staff – from the Director's office to the operations room – have gone the extra mile for Planck, whether providing enhanced priority for mission-critical computations or instantly responding to urgent calls from a working meeting in Paris at three o'clock in the morning."

Borrill says the result "is an extraordinarily productive way for the agencies to collaborate – especially when times are tight. The pooling of different areas of expertise makes Planck at NERSC a model for the next generation of multi-agency, data-intensive dark energy experiments."

Such experiments include the ground-based Mid-Scale Dark Energy Spectroscopic Instrument (MS-DESI) and Large Synoptic Survey Telescope (LSST), as well as the Wide-Field Infrared Survey Telescope (WFIRST) and Euclid satellite missions.

And there's much more to be discovered in the CMB beyond Planck. Current and future experiments include the POLARBEAR telescope in Chile's Atacama Desert, the EBEX and Spider Antarctic balloon flights, and the proposed Inflation Probe satellite, all of which will look for the faint signal of so-called B-mode polarization. This polarization is predicted to carry the imprint of gravitational waves produced in the very early universe, a signal that has been called "the smoking gun of cosmic inflation."

CMB scientists will have to stay on the leading edge of high-performance computing to keep up with another decade of exponential data growth. For example, the first phase of POLARBEAR will gather 10 times as much data as Planck, rising to 100 times as much when it is extended to three telescopes later this decade; an Inflation Probe mission early next decade would gather 1,000 times Planck's data. Centers like NERSC, with their reliable cycle of system upgrades, are essential for handling this work.